Introduction

What we want to achieve

You fire up your agent of choice through Symposium. It has a more collaborative style and remembers the way you like to work. It knows about your dependencies and incorporates advice supplied by the crate authors on how best to use them. You can install extensions that transform the agent: new skills, new MCP servers, or more advanced capabilities like custom GUI interfaces and new ways of working.

AI the Rust Way

Symposium brings Rust’s design philosophy to AI-assisted development.

Leverage the wisdom of crates.io

Rust embraces a small stdlib and a rich crate ecosystem. Symposium brings that philosophy to AI: your dependencies can teach your agent how to use them. Add a crate, and your agent learns its idioms, patterns, and best practices.

Beyond crate knowledge, we want to make it easy to publish agent extensions that others can try out and adopt just by adding a line to their configuration — the same way you’d add a dependency to Cargo.toml.

Stability without stagnation

Rust evolves quickly and agents’ training data goes stale. Symposium helps your agent take advantage of the latest Rust features and learn how to use new or private crates — things not found in its training data.

We provide guides and context that keep models up-to-date, helping them write idiomatic Rust rather than JavaScript-in-disguise.

Open, portable, and vendor neutral

Open source tools that everyone can improve. Build extensions once, use them with any ACP-compatible agent. No vendor lock-in.

How to install

Install in your favorite editor

Installing from source

Clone the repository and use the setup tool:

git clone https://github.com/symposium-dev/symposium.git

cd symposium

cargo setup --all

Setup options

| Option | Description |
|---|---|
| --all | Install everything (ACP binaries, VSCode extension, Zed config) |
| --acp | Install ACP binaries only |
| --vscode | Build and install VSCode extension |
| --zed | Configure Zed editor |
| --dry-run | Show what would be done without making changes |

Options can be combined:

cargo setup --acp --zed # Install ACP binaries and configure Zed

For editors other than VSCode and Zed, you need to manually configure your editor to run symposium-acp-agent run.

VSCode and VSCode-based editors

Step 1: Install the extension

Install the Symposium extension from the VSCode Marketplace or Open VSX.

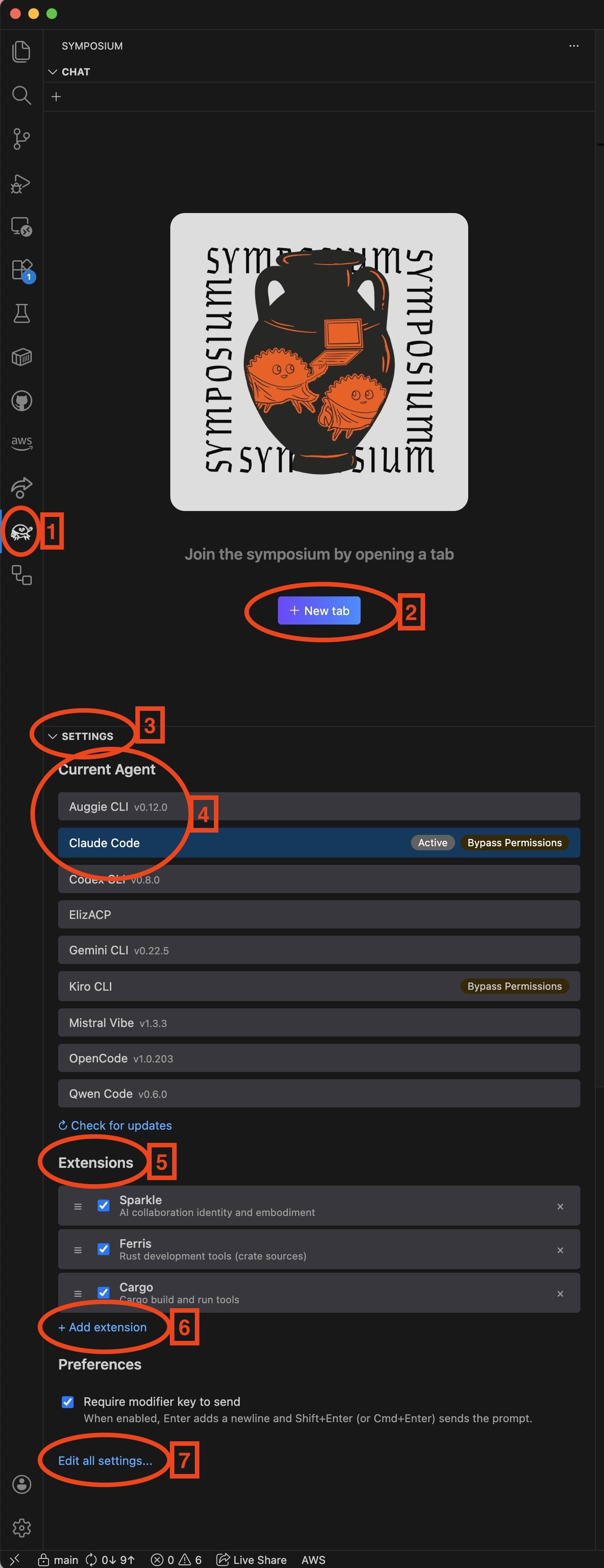

Step 2: Activate the panel and start chatting

- Activity bar icon — Click to open the Symposium panel

- New tab — Start a new conversation with the current settings

- Settings — Expand to configure agent and extensions

- Agent selector — Choose which agent to use (Claude Code, Gemini CLI, etc.)

- Extensions — Enable MCP servers that add capabilities to your agent

- Add extension — Add custom extensions

- Edit all settings — Access full settings

Custom Agents

The agent selector shows agents from the Symposium registry. To add a custom agent not in the registry, use VS Code settings.

Open Settings (Cmd/Ctrl+,) and search for symposium.agents. Add your custom agent to the JSON array:

"symposium.agents": [

{

"id": "my-custom-agent",

"name": "My Custom Agent",

"distribution": {

"npx": { "package": "@myorg/my-agent" }

}

}

]

Distribution Types

| Type | Example | Notes |
|---|---|---|
| npx | { "npx": { "package": "@org/pkg" } } | Requires npm/npx installed |
| pipx | { "pipx": { "package": "my-agent" } } | Requires pipx installed |
| executable | { "executable": { "path": "/usr/local/bin/my-agent" } } | Local binary |

Your custom agent will appear in the agent selector alongside registry agents.

Other Editors

Symposium works with any editor that supports ACP. See the editors on ACP page for a list of supported editors and how to install ACP support.

Installation

- Install ACP support in your editor of choice

- Install the Symposium agent binary:

cargo binstall symposium-acp-agent

or from source:

cargo install symposium-acp-agent

- Configure your editor to run:

~/.cargo/bin/symposium-acp-agent run

Instructions for configuring ACP support in common editors can be found here:

Configuring Symposium

On first run, Symposium will ask you a few questions to create your configuration file at ~/.symposium/config.jsonc:

Welcome to Symposium!

No configuration found. Let's set up your AI agent.

Which agent would you like to use?

1. Claude Code

2. Gemini CLI

3. Codex

4. Kiro CLI

Type a number (1-4) to select:

After selecting an agent, Symposium creates the config file and you can restart your editor to start using it.

Manual Configuration

You can edit ~/.symposium/config.jsonc directly for more control. The format is:

{

"agent": "npx -y @zed-industries/claude-code-acp",

"proxies": [

{ "name": "sparkle", "enabled": true },

{ "name": "ferris", "enabled": true },

{ "name": "cargo", "enabled": true }

]

}

Fields:

- agent: The command to run your downstream AI agent. This is passed to the shell, so you can use any command that works in your terminal.

- proxies: List of Symposium extensions to enable. Each entry has:

  - name: The extension name

  - enabled: Set to true or false to enable/disable
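As a rough illustration of how these fields fit together, the sketch below models the config in TypeScript and picks out the enabled extensions in their listed order. The names `SymposiumConfig`, `ProxyEntry`, and `enabledProxyNames` are hypothetical and not part of Symposium's API; how Symposium itself parses the file is not shown here.

```typescript
// Hypothetical types mirroring the config.jsonc fields described above.
interface ProxyEntry {
  name: string;
  enabled: boolean;
}

interface SymposiumConfig {
  agent: string;
  proxies: ProxyEntry[];
}

// Return the names of enabled extensions, preserving their listed order.
function enabledProxyNames(config: SymposiumConfig): string[] {
  return config.proxies
    .filter((proxy) => proxy.enabled)
    .map((proxy) => proxy.name);
}

const exampleConfig: SymposiumConfig = {
  agent: "npx -y @zed-industries/claude-code-acp",
  proxies: [
    { name: "sparkle", enabled: true },
    { name: "ferris", enabled: false },
    { name: "cargo", enabled: true },
  ],
};

// With ferris disabled, only sparkle and cargo remain, in that order.
const exampleEnabled = enabledProxyNames(exampleConfig);
```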

Built-in Extensions

| Name | Description |
|---|---|
| sparkle | AI collaboration identity and memory |
| ferris | Rust crate source fetching |
| cargo | Cargo build/test/check commands |

Using Symposium

Symposium focuses on creating the best environment for Rust coding through Agent Extensions - MCP servers that add specialized tools and context to your agent.

Symposium is built on the Agent Client Protocol (ACP), which means the core functionality is portable across editors and environments. VSCode is the showcase environment with experimental GUI support, but the basic functionality can be configured in any ACP-supporting editor.

The instructions below use the VSCode extension as the basis for explanation.

Selecting an Agent

To select an agent, click on it in the agent picker. Symposium will download and install the agent binary automatically.

Some agents may require additional tools to be available on your system:

- npx - for agents distributed via npm

- uvx - for agents distributed via Python

- cargo - for agents distributed via crates.io (uses cargo binstall if available, falls back to cargo install)

Symposium checks for updates and installs new versions automatically as they become available.

For adding custom agents not in the registry, see VSCode Installation - Custom Agents.

Managing Extensions

Extensions add capabilities to your agent. Open the Settings panel to manage them.

In the Extensions section you can:

- Enable/disable extensions via the checkbox

- Reorder extensions by dragging the handle

- Add extensions via the “+ Add extension” link

- Delete extensions from the list

Order matters - extensions are applied in the order listed. The first extension is closest to the editor, and the last is closest to the agent.

When adding extensions, you can choose from:

- Built-in extensions (Sparkle, Ferris, Cargo)

- Registry extensions from the shared catalog

- Custom extensions via executable, npx, pipx, cargo, or URL

Built-in Extensions

Symposium ships with three built-in extensions that enhance your agent’s capabilities for Rust development.

- Sparkle - AI collaboration framework that learns your working patterns

- Ferris - Rust crate source inspection

- Cargo - Compressed cargo command output

See Using Symposium for how to enable, disable, and reorder extensions.

Sparkle

Sparkle is an AI collaboration framework that transforms your agent from a helpful assistant into a thinking partner. It learns your working patterns over time and maintains context across sessions.

Quick Reference

| What | How |

|---|---|

| Activate | Automatic when extension is enabled |

| Teach a pattern | Say “meta moment” during a session |

| Save session | Use /checkpoint before ending |

| Local state | .sparkle-space/ (add to .gitignore) |

| Persistent learnings | ~/.sparkle/ |

How It Works

Automatic activation - When the Sparkle extension is enabled, it activates automatically when you create a new thread. No manual setup required.

Local workspace state - Sparkle creates a .sparkle-space/ directory in your workspace to store working memory and session checkpoints. Add this to your .gitignore.

Persistent learnings - Pattern anchors and collaboration insights are stored in ~/.sparkle/ and carry across all your workspaces.

Pattern anchors - These are exact phrases that recreate collaborative patterns. Sparkle learns these over time as you work together, capturing what works well in your collaboration style.

Teaching patterns - During a session, say “meta moment” to pause and examine what’s working. Sparkle will capture the insight as a pattern anchor or collaboration evolution that future sessions can build on.

Closing out - Use /checkpoint to save session learnings before ending. This preserves your progress and creates continuity for the next session.

Learn More

For full documentation on Sparkle’s collaboration patterns and identity framework, see the Sparkle documentation.

Ferris

Ferris provides tools for inspecting Rust crate source code, helping your agent understand actual implementations rather than guessing at APIs.

Quick Reference

| What | How |

|---|---|

| Fetch crate sources | Agent uses crate_sources tool |

| Check workspace version | Automatic - defaults to version in your Cargo.toml |

| Specify version | Agent can request specific versions or semver ranges |

How It Works

When your agent needs to understand how a crate works, Ferris can fetch the source code directly from crates.io. This is useful when:

- Working with an unfamiliar crate

- Checking exact API signatures

- Understanding internal implementation details

- Finding usage examples in the crate’s own code

Tips

Encourage source checking - If your agent seems uncertain about a crate’s API or is making incorrect assumptions, prompt it to “check the sources” for that crate. This often leads to more accurate code.

Version awareness - Ferris automatically uses the crate version from your workspace’s Cargo.toml. If you need a different version, you can ask for a specific version or semver range.

Future Plans

Ferris is a work in progress. Future versions will include guidance on strong Rust coding patterns to help your agent write more idiomatic Rust.

Cargo

Cargo provides tools for running common cargo commands with compressed output, helping your agent save context and focus on what matters.

Quick Reference

| What | How |

|---|---|

| Build | Agent uses cargo build tool |

| Run | Agent uses cargo run tool |

| Test | Agent uses cargo test tool |

How It Works

Instead of running raw cargo commands through bash, your agent can use Cargo’s specialized tools. These tools:

- Compress output - Filter and summarize cargo’s verbose output to highlight errors, warnings, and key information

- Save context - Reduce token usage by removing noise, leaving more room for actual problem-solving

- Focus attention - Present the most important output first so the agent can quickly identify issues

Why Not Just Bash?

Raw cargo build output can be verbose, especially with many dependencies or detailed error messages. The Cargo extension processes this output to extract what the agent actually needs to see, making it more efficient at diagnosing and fixing issues.

Creating Agent Extensions

A Symposium agent extension is an ACP (Agent Client Protocol) proxy that sits between the client and the agent. Proxies can intercept and transform messages, inject context, provide MCP tools, and coordinate agent behavior. Agent extensions are typically distributed as Rust crates on crates.io.

Basic Structure

Your extension crate should:

- Implement an ACP proxy using the sacp crate

- Produce a binary that speaks ACP over stdio

- Include Symposium metadata in Cargo.toml

See the sacp cookbook on building proxies for implementation details and examples.

Cargo.toml Metadata

Add metadata to tell Symposium how to run your extension:

[package]

name = "my-extension"

version = "0.1.0"

description = "Help agents work with MyLibrary"

[package.metadata.symposium]

# Optional: specify which binary if your crate has multiple

binary = "my-extension"

# Optional: arguments to pass when spawning

args = []

# Optional: environment variables

env = { MY_CONFIG = "value" }

The name, description, and version come from the standard [package] section.

Testing Your Extension

Before publishing:

- Install locally: cargo install --path .

- Test with Symposium: add to your local config and verify it loads correctly

- Check ACP compliance: ensure your proxy handles proxy/initialize correctly

Recommending Agent Extensions

There are two ways to recommend agent extensions to users:

- Central recommendations - submit to the Symposium recommendations registry

- Crate metadata - add recommendations directly in your crate’s Cargo.toml

Extension Sources

Extensions are referenced using a source field:

| Source | Syntax | Description |

|---|---|---|

| crates.io | source.crate = "name" | Rust crate installed via cargo |

| ACP Registry | source.acp = "id" | Extension from the ACP registry |

| Direct URL | source.url = "https://..." | Direct link to extension.json |

Central Recommendations

Submit a PR to symposium-dev/recommendations adding an entry:

[[recommendation]]

source.crate = "my-extension"

when-using-crate = "my-library"

# Or for multiple trigger crates:

[[recommendation]]

source.crate = "my-extension"

when-using-crates = ["my-library", "my-library-derive"]

This tells Symposium: “If a project depends on my-library, suggest my-extension.”

Users can also add their own local recommendation files for internal/proprietary extensions.

Crate Metadata Recommendations

If you maintain a library, you can recommend extensions directly in your Cargo.toml. Users of your crate will see these suggestions in Symposium.

Shorthand Syntax

For crates.io extensions:

[package.metadata.symposium]

recommended = ["some-extension", "another-extension"]

Full Syntax

For extensions from other sources:

# Recommend a crates.io extension

[[package.metadata.symposium.recommended]]

source.crate = "some-extension"

# Recommend an extension from the ACP registry

[[package.metadata.symposium.recommended]]

source.acp = "some-acp-extension"

# Recommend an extension from a direct URL

[[package.metadata.symposium.recommended]]

source.url = "https://example.com/extension.json"
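One way to read the two syntaxes is that each shorthand string stands for a `source.crate` entry. The sketch below normalizes both forms into a single list of sources. The type and function names are hypothetical, and Symposium's actual parsing may differ.

```typescript
// Hypothetical model of the three documented source kinds.
type ExtensionSource = { crate: string } | { acp: string } | { url: string };

// A `recommended` entry is either a shorthand crate name or a full table.
type RecommendedEntry = string | { source: ExtensionSource };

// Treat every shorthand string as a crates.io source; pass full entries through.
function normalizeRecommended(
  entries: readonly RecommendedEntry[],
): ExtensionSource[] {
  return entries.map((entry): ExtensionSource =>
    typeof entry === "string" ? { crate: entry } : entry.source,
  );
}

// Shorthand form: every string becomes a { crate } source.
const shorthandSources = normalizeRecommended([
  "some-extension",
  "another-extension",
]);

// Full form: sources are passed through unchanged.
const fullSources = normalizeRecommended([
  { source: { acp: "some-acp-extension" } },
  { source: { url: "https://example.com/extension.json" } },
]);
```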

Example

If you maintain tokio, you might add:

[package]

name = "tokio"

version = "1.0.0"

[package.metadata.symposium]

recommended = ["symposium-tokio"]

Users who depend on tokio will see “Tokio Support” suggested in their Symposium settings.

Publishing Agent Extensions

Publishing to crates.io

The simplest way to distribute an agent extension is to publish it to crates.io. Symposium can install extensions directly from crates.io using cargo binstall (for pre-built binaries) or cargo install (building from source).

To make your agent extension installable:

- Publish your crate to crates.io as usual

- Include a binary target that speaks ACP over stdio

- Optionally add [package.metadata.symposium] for configuration (see Creating Extensions)

That’s it. Users can reference your extension by crate name, and crate authors can recommend it in their Cargo.toml (see Recommending Extensions).

Publishing to the ACP Registry (optional)

The ACP Registry is a curated catalog of extensions with broad applicability. Publishing here is appropriate for:

- General-purpose extensions like Sparkle (AI collaboration identity) that help across all projects

- Language/framework extensions that benefit many projects

- Tool integrations that aren’t tied to a specific library

For crate-specific extensions (e.g., an extension that helps with a particular library), crates.io distribution with Cargo.toml recommendations is more appropriate. Users of that library will discover the extension through the recommendation system.

Submitting to the Registry

- Fork the registry repository

- Create a directory for your extension: my-extension/

- Add extension.json:

{

"id": "my-extension",

"name": "My Extension",

"version": "0.1.0",

"description": "General-purpose extension for X",

"repository": "https://github.com/you/my-extension",

"license": "MIT",

"distribution": {

"cargo": {

"crate": "my-extension"

}

}

}

- Submit a pull request

Distribution Types

Extensions in the registry can specify different distribution methods:

| Type | Example | Description |
|---|---|---|
| cargo | { "crate": "my-ext" } | Rust crate from crates.io |
| npx | { "package": "@org/ext" } | npm package |
| binary | Platform-specific archives | Pre-built binaries |

For Rust crates, cargo distribution is recommended - it leverages the existing crates.io infrastructure.

How to contribute

Symposium is an open-source project in active development. We’re iterating heavily and welcome collaborators who want to shape where this goes.

Come chat with us

The best way to get involved is to join us on Zulip. We use it for design discussions, coordination, and general conversation about AI-assisted development.

Drop in, say hello, and tell us what you’re interested in working on.

The codebase

The code lives at github.com/symposium-dev/symposium.

We maintain a code of conduct and operate as an independent community exploring what AI has to offer for software development.

Expectations

Given the exploratory nature of Symposium, expect frequent changes. APIs are unstable, and we’re still figuring out the right abstractions. This is a good time to contribute if you want to influence the direction — but be prepared for things to shift as we learn.

Implementation Overview

Symposium uses a conductor to orchestrate a dynamic chain of component proxies that enrich agent capabilities. This architecture adapts to different client capabilities and provides consistent functionality regardless of what the editor or agent natively supports.

Deployment Modes

The symposium-acp-agent binary supports several subcommands:

Run Mode (run)

The primary way to use Symposium. Reads configuration from ~/.symposium/config.jsonc:

symposium-acp-agent run

If no configuration exists, runs an interactive setup wizard. See Run Mode for details.

Run-With Mode (run-with)

For programmatic use by editor extensions. Takes explicit agent and proxy configuration:

flowchart LR

Editor --> Agent[symposium-acp-agent] --> DownstreamAgent[claude-code, etc.]

Example with agent (wraps downstream agent):

symposium-acp-agent run-with --proxy defaults --agent '{"name":"...","command":"npx",...}'

Example without agent (proxy mode, sits between editor and existing agent):

symposium-acp-agent run-with --proxy sparkle --proxy ferris

Proxy Configuration

Use --proxy <name> to specify which extensions to include. Order matters - proxies are chained in the order specified.

Known proxies: sparkle, ferris, cargo

The special value defaults expands to all known proxies:

--proxy defaults # equivalent to: --proxy sparkle --proxy ferris --proxy cargo

--proxy foo --proxy defaults --proxy bar # foo, then all defaults, then bar

If no --proxy flags are given, no proxies are included (pure passthrough).
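The expansion rule can be sketched as a small function over the `--proxy` values in the order given. This is an illustrative sketch only: `KNOWN_PROXIES` and `expandProxies` are hypothetical names, and the real argument parsing lives in symposium-acp-agent.

```typescript
// Names of the known proxies, in the order `defaults` expands to.
const KNOWN_PROXIES: readonly string[] = ["sparkle", "ferris", "cargo"];

// Expand each `--proxy` value in order; `defaults` becomes all known proxies.
function expandProxies(values: readonly string[]): string[] {
  const chain: string[] = [];
  for (const value of values) {
    if (value === "defaults") {
      chain.push(...KNOWN_PROXIES);
    } else {
      chain.push(value);
    }
  }
  return chain;
}

// foo, then all defaults, then bar.
const expandedChain = expandProxies(["foo", "defaults", "bar"]);

// No --proxy flags: an empty chain, i.e. pure passthrough.
const passthroughChain = expandProxies([]);
```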

Internal Structure

Both modes use a conductor to orchestrate multiple component proxies:

flowchart LR

Input[Editor/stdin] --> S[Symposium Conductor]

S --> C1[Component 1]

C1 --> A1[Adapter 1]

A1 --> C2[Component 2]

C2 --> Output[Agent/stdout]

The conductor dynamically builds this chain based on what capabilities the editor and agent provide.

Component Pattern

Some Symposium features are implemented as component/adapter pairs:

Components

Components provide functionality to agents through MCP tools and other mechanisms. They:

- Expose high-level capabilities (e.g., Dialect-based IDE operations)

- May rely on primitive capabilities from upstream (the editor)

- Are always included in the chain when their functionality is relevant

Adapters

Adapters “shim” for missing primitive capabilities by providing fallback implementations. They:

- Check whether required primitive capabilities exist upstream

- Provide the capability if it’s missing (e.g., spawn rust-analyzer to provide IDE operations)

- Pass through transparently if the capability already exists

- Are conditionally included only when needed

Capability-Driven Assembly

During initialization, Symposium:

- Receives capabilities from the editor - examines what the upstream client provides

- Queries the agent - discovers what capabilities the downstream agent supports

- Builds the proxy chain - spawns components and adapters based on detected gaps and opportunities

- Advertises enriched capabilities - tells the editor what the complete chain provides

This approach allows Symposium to work with minimal ACP clients (by providing fallback implementations) while taking advantage of native capabilities when available (by passing through directly).
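A minimal sketch of the "include an adapter only when needed" decision might look like the following. Everything here is an assumption for illustration: the `ComponentSpec` shape, the capability and component names, and the choice to place each adapter directly after its component (which follows the flowchart above). The real conductor logic is not shown in this document.

```typescript
// Hypothetical description of a component and its optional adapter.
interface ComponentSpec {
  component: string; // name of the component proxy
  requires?: string; // primitive capability it relies on, if any
  adapter?: string; // adapter that can supply that capability
}

// Build the chain: always include the component, and include its adapter
// only when the editor does not already provide the required capability.
function planChain(
  editorCapabilities: readonly string[],
  specs: readonly ComponentSpec[],
): string[] {
  const chain: string[] = [];
  for (const spec of specs) {
    chain.push(spec.component);
    const missing =
      spec.requires !== undefined &&
      editorCapabilities.indexOf(spec.requires) === -1;
    if (missing && spec.adapter !== undefined) {
      chain.push(spec.adapter);
    }
  }
  return chain;
}

// Hypothetical names, for illustration only.
const exampleSpecs: ComponentSpec[] = [
  {
    component: "ide-component",
    requires: "ide-operations",
    adapter: "rust-analyzer-adapter",
  },
];

// Editor lacks the capability: the adapter is included.
const minimalClientChain = planChain([], exampleSpecs);

// Editor provides it natively: the adapter is omitted.
const richClientChain = planChain(["ide-operations"], exampleSpecs);
```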

For detailed information about the initialization sequence and capability negotiation, see Initialization Sequence.

Common Issues

This section documents recurring bugs and pitfalls to check when implementing new features.

VS Code Extension

Configuration Not Affecting New Tabs

Symptom: User changes a setting, but new tabs still use the old value.

Cause: The setting affects how the agent process is spawned, but isn’t included in AgentConfiguration.key(). Tabs with the same key share an agent process, so the new tab reuses the existing (stale) process.

Fix: Include the setting in AgentConfiguration:

- Add the setting to the AgentConfiguration constructor

- Include it in key() so different values produce different keys

- Read it in fromSettings() when creating configurations

Example: The symposium.extensions setting was added but new tabs ignored it until extensions were added to AgentConfiguration.key(). See commit fix: include extensions in AgentConfiguration key.

General principle: If a setting affects process behavior (CLI args, environment, etc.), it must be part of the process identity key.
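To make the principle concrete, here is a simplified stand-in for the class. It is not the extension's actual `AgentConfiguration`: the field names and the JSON-based key format are assumptions chosen for illustration.

```typescript
// Hypothetical, simplified stand-in for the extension's AgentConfiguration.
class AgentConfiguration {
  readonly agentId: string;
  readonly extensions: readonly string[];

  constructor(agentId: string, extensions: readonly string[]) {
    this.agentId = agentId;
    this.extensions = extensions;
  }

  // Every setting that changes how the process is spawned must appear here,
  // so that tabs with different settings never share an agent process.
  key(): string {
    return JSON.stringify({
      agent: this.agentId,
      extensions: this.extensions,
    });
  }
}

const oldTabConfig = new AgentConfiguration("zed-claude-code", ["sparkle"]);
const newTabConfig = new AgentConfiguration("zed-claude-code", [
  "sparkle",
  "ferris",
]);

// The keys differ, so the new tab gets its own process instead of reusing
// the one spawned with the old extension list.
const keysDiffer = oldTabConfig.key() !== newTabConfig.key();
```

Had `extensions` been left out of `key()`, both configurations would produce the same key and the second tab would reuse the stale process.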

Distribution

This chapter documents how Symposium is released and distributed across platforms.

Release Orchestration

Releases are triggered by release-plz, which:

- Creates a release PR when changes accumulate on main

- When merged, publishes to crates.io and creates GitHub releases with tags

The symposium-acp-agent-v* tag triggers the binary release workflow.

Distribution Channels

release-plz creates tag

↓

┌───────────────────────────────────────┐

│ GitHub Release │

│ - Binary archives (all platforms) │

│ - VSCode .vsix files │

│ - Source reference │

└───────────────────────────────────────┘

↓

┌─────────────┬─────────────┬───────────┐

│ crates.io │ VSCode │ Zed │

│ │ Marketplace │Extensions │

│ │ + Open VSX │ │

└─────────────┴─────────────┴───────────┘

crates.io

The Rust crates are published directly by release-plz. Users can install via:

cargo install symposium-acp-agent

VSCode Marketplace / Open VSX

Platform-specific extensions are built and published automatically. Each platform gets its own ~7MB extension containing only that platform’s binary.

See VSCode Packaging for details.

Zed Extensions

The Zed extension (zed-extension/) points to GitHub release archives. Publishing requires submitting a PR to the zed-industries/extensions repository.

Direct Download

Binary archives are attached to each GitHub release for direct download:

- symposium-darwin-arm64.tar.gz

- symposium-darwin-x64.tar.gz

- symposium-linux-x64.tar.gz

- symposium-linux-arm64.tar.gz

- symposium-linux-x64-musl.tar.gz

- symposium-windows-x64.zip

Supported Platforms

| Platform | Architecture | Notes |

|---|---|---|

| macOS | arm64 (Apple Silicon) | Primary development platform |

| macOS | x64 (Intel) | |

| Linux | x64 (glibc) | Standard Linux distributions |

| Linux | arm64 | ARM servers, Raspberry Pi |

| Linux | x64 (musl) | Static binary, Alpine Linux |

| Windows | x64 | |

Secrets Required

The release workflow requires these GitHub secrets:

| Secret | Purpose |
|---|---|
| RELEASE_PLZ_TOKEN | GitHub token for release-plz to create releases |
| VSCE_PAT | Azure DevOps PAT for VSCode Marketplace |
| OVSX_PAT | Open VSX access token |

Agent Registry

Symposium supports multiple ACP-compatible agents and extensions. Users can select from built-in defaults or add entries from the ACP Agent Registry.

The registry resolution logic lives in symposium-acp-agent and is shared across all editor integrations.

Agent Configuration

Each agent or extension is represented as an AgentConfig object:

interface AgentConfig {

// Required fields

id: string;

distribution: {

local?: { command: string; args?: string[]; env?: Record<string, string> };

symposium?: { subcommand: string; args?: string[] };

npx?: { package: string; args?: string[] };

pipx?: { package: string; args?: string[] };

cargo?: { crate: string; version?: string; binary?: string; args?: string[] };

binary?: {

[platform: string]: { // e.g., "darwin-aarch64", "linux-x86_64"

archive: string;

cmd: string;

args?: string[];

};

};

};

// Optional fields (populated from registry if imported)

name?: string; // display name, defaults to id

version?: string;

description?: string;

// ... other registry fields as needed

// Source tracking

_source?: "registry" | "custom"; // defaults to "custom" if omitted

}

Built-in Agents

Three agents ship as defaults with _source: "custom":

[

{

"id": "zed-claude-code",

"name": "Claude Code",

"distribution": { "npx": { "package": "@zed-industries/claude-code-acp@latest" } }

},

{

"id": "elizacp",

"name": "ElizACP",

"description": "Built-in Eliza agent for testing",

"distribution": { "symposium": { "subcommand": "eliza" } }

},

{

"id": "kiro-cli",

"name": "Kiro CLI",

"distribution": { "local": { "command": "kiro-cli-chat", "args": ["acp"] } }

}

]

Registry-Imported Agents

When a user imports an agent from the registry, the full registry entry is stored with _source: "registry":

{

"id": "gemini",

"name": "Gemini CLI",

"version": "0.22.3",

"description": "Google's official CLI for Gemini",

"_source": "registry",

"distribution": {

"npx": { "package": "@google/gemini-cli@0.22.3", "args": ["--experimental-acp"] }

}

}

Custom Agents

Users can manually add agents with minimal configuration:

{

"id": "my-agent",

"distribution": { "npx": { "package": "my-agent-package" } }

}

Registry Sync

For agents with _source: "registry", the extension checks for updates and applies them automatically. Agents removed from the registry are left unchanged—the configuration still works, it just won’t receive future updates.

The registry URL:

https://github.com/agentclientprotocol/registry/releases/latest/download/registry.json
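The sync rule described above can be sketched as a pure function over the stored agents and the fetched registry entries. The `StoredAgent` type is a trimmed-down, hypothetical version of `AgentConfig`, and `syncWithRegistry` is an illustrative name rather than the extension's real function.

```typescript
// Trimmed-down, hypothetical version of AgentConfig for this sketch.
interface StoredAgent {
  id: string;
  version?: string;
  _source?: "registry" | "custom";
}

// Replace registry-sourced agents with their latest registry entry.
// Custom agents, and agents no longer in the registry, are left unchanged.
function syncWithRegistry(
  stored: readonly StoredAgent[],
  registry: readonly StoredAgent[],
): StoredAgent[] {
  return stored.map((agent): StoredAgent => {
    if (agent._source !== "registry") {
      return agent;
    }
    const matches = registry.filter((entry) => entry.id === agent.id);
    if (matches.length === 0) {
      return agent; // removed from the registry: keep the old configuration
    }
    return { ...matches[0], _source: "registry" };
  });
}

const storedAgents: StoredAgent[] = [
  { id: "gemini", version: "0.22.3", _source: "registry" },
  { id: "my-agent" }, // _source omitted, so treated as custom
  { id: "retired-agent", version: "1.0.0", _source: "registry" },
];

// Hypothetical newer registry contents; the version number is made up.
const fetchedRegistry: StoredAgent[] = [{ id: "gemini", version: "0.23.0" }];

const syncedAgents = syncWithRegistry(storedAgents, fetchedRegistry);
```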

Spawning an Agent

At spawn time, the extension resolves the distribution to a command (priority order):

- If distribution.local exists → {command} {args...} with optional env vars

- Else if distribution.symposium exists → run as symposium subcommand

- Else if distribution.npx exists → npx -y {package} {args...}

- Else if distribution.pipx exists → pipx run {package} {args...}

- Else if distribution.cargo exists → install and run Rust crate (see below)

- Else if distribution.binary[currentPlatform] exists:

  - Check ~/.symposium/bin/{id}/{version}/ for cached binary

  - If not present, download and extract from archive

  - Execute {cache-path}/{cmd} {args...}

- Else → error (no compatible distribution for this platform)
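The priority order above can be sketched for the distribution types that map directly to a command line. This is a simplified illustration, not the actual resolver: `SimpleDistribution` and `resolveCommand` are hypothetical names, the cargo and binary cases are left out because they need an install or download step first, and running a symposium subcommand via the `symposium-acp-agent` binary is an assumption.

```typescript
// Subset of the documented distribution shapes (cargo and binary omitted).
interface SimpleDistribution {
  local?: { command: string; args?: string[] };
  symposium?: { subcommand: string; args?: string[] };
  npx?: { package: string; args?: string[] };
  pipx?: { package: string; args?: string[] };
}

// Resolve a distribution to an argv array, checking types in priority order.
function resolveCommand(dist: SimpleDistribution): string[] {
  if (dist.local) {
    return [dist.local.command, ...(dist.local.args ?? [])];
  }
  if (dist.symposium) {
    // Assumption: the subcommand runs on the symposium-acp-agent binary.
    return [
      "symposium-acp-agent",
      dist.symposium.subcommand,
      ...(dist.symposium.args ?? []),
    ];
  }
  if (dist.npx) {
    return ["npx", "-y", dist.npx.package, ...(dist.npx.args ?? [])];
  }
  if (dist.pipx) {
    return ["pipx", "run", dist.pipx.package, ...(dist.pipx.args ?? [])];
  }
  throw new Error("no compatible distribution for this platform");
}

// Resolves to: npx -y @google/gemini-cli@0.22.3 --experimental-acp
const geminiCommand = resolveCommand({
  npx: { package: "@google/gemini-cli@0.22.3", args: ["--experimental-acp"] },
});
```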

Cargo Distribution

The cargo distribution installs agents/extensions from crates.io:

{

"id": "my-rust-extension",

"distribution": {

"cargo": {

"crate": "my-acp-extension",

"version": "0.1.0"

}

}

}

Resolution process:

- Version resolution: If no version specified, query crates.io for the latest stable version

- Binary discovery: Query crates.io API for the crate’s bin_names field to determine the executable name

- Cache check: Look for ~/.symposium/bin/{id}/{version}/bin/{binary}

- Installation: If not cached:

  - Try cargo binstall --no-confirm --root {cache-dir} {crate}@{version} (uses prebuilt binaries, fast)

  - If binstall fails or unavailable, fall back to cargo install --root {cache-dir} {crate}@{version} (builds from source)

- Cleanup: Delete old versions when installing a new one

The binary field is optional—if omitted, it’s discovered from crates.io. If the crate has multiple binaries, the field is required to disambiguate.
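The cache layout and the two install attempts can be sketched as plain string construction. The function names are hypothetical, and the sketch assumes that `{cache-dir}` is `~/.symposium/bin/{id}/{version}`, which is consistent with the cache-check path above but not stated outright. Nothing is executed here; the functions only build the paths and argument lists.

```typescript
// Cache directory for one installed version (assumed to be the --root).
function cargoCacheDir(symposiumHome: string, id: string, version: string): string {
  return `${symposiumHome}/bin/${id}/${version}`;
}

// Install attempts in the order they are tried: binstall first, then install.
function cargoInstallAttempts(
  crate: string,
  version: string,
  root: string,
): string[][] {
  const spec = `${crate}@${version}`;
  return [
    ["cargo", "binstall", "--no-confirm", "--root", root, spec],
    ["cargo", "install", "--root", root, spec],
  ];
}

// "/home/me/.symposium" stands in for ~/.symposium on a hypothetical machine.
const exampleRoot = cargoCacheDir("/home/me/.symposium", "my-rust-extension", "0.1.0");
const exampleAttempts = cargoInstallAttempts("my-acp-extension", "0.1.0", exampleRoot);

// cargo places executables under {root}/bin, matching the cache-check path.
const exampleBinaryPath = `${exampleRoot}/bin/my-acp-extension`;
```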

Platform Detection

Map from Node.js to registry platform keys:

| process.platform | process.arch | Registry Key |
|---|---|---|
| darwin | arm64 | darwin-aarch64 |
| darwin | x64 | darwin-x86_64 |
| linux | x64 | linux-x86_64 |
| linux | arm64 | linux-aarch64 |
| win32 | x64 | windows-x86_64 |
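The table translates directly into a lookup. In this sketch the function takes the platform and architecture as parameters (in the extension these would come from `process.platform` and `process.arch`); the names `REGISTRY_PLATFORM_KEYS` and `registryPlatformKey` are hypothetical.

```typescript
// Lookup table copied from the mapping above, keyed by "platform-arch".
const REGISTRY_PLATFORM_KEYS: Record<string, string> = {
  "darwin-arm64": "darwin-aarch64",
  "darwin-x64": "darwin-x86_64",
  "linux-x64": "linux-x86_64",
  "linux-arm64": "linux-aarch64",
  "win32-x64": "windows-x86_64",
};

// Return the registry key, or undefined for combinations not in the table.
function registryPlatformKey(platform: string, arch: string): string | undefined {
  const lookup = `${platform}-${arch}`;
  return Object.prototype.hasOwnProperty.call(REGISTRY_PLATFORM_KEYS, lookup)
    ? REGISTRY_PLATFORM_KEYS[lookup]
    : undefined;
}

const macKey = registryPlatformKey("darwin", "arm64"); // "darwin-aarch64"
const unlistedKey = registryPlatformKey("win32", "arm64"); // undefined (not in table)
```

Returning `undefined` for unlisted combinations lets the caller fall through to the "no compatible distribution" error rather than guessing a key.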

CLI Commands

The symposium-acp-agent binary provides registry subcommands:

# List all available agents (built-ins + registry)

symposium-acp-agent registry list

# Resolve an agent ID to an executable command (McpServer JSON)

symposium-acp-agent registry resolve <agent-id>

The registry list output is a JSON array of {id, name, version?, description?} objects.

The registry resolve output is an McpServer JSON object ready for spawning:

{"name":"Agent Name","command":"/path/to/binary","args":["--flag"],"env":[]}

Decisions

- Binary cleanup: Delete old versions when downloading a new one. No accumulation.

- Registry caching: Registry is cached in memory during a session and fetched fresh on first access.

Agent Extensions

Agent extensions are proxy components that enrich an agent’s capabilities. They sit between the editor and the agent, adding tools, context, and behaviors.

Built-in Extensions

| ID | Name | Description |
|---|---|---|
| sparkle | Sparkle | AI collaboration identity and embodiment |
| ferris | Ferris | Rust development tools (crate sources, rust researcher) |
| cargo | Cargo | Cargo build and run tools |

Extension Sources

Extensions can come from multiple sources:

- built-in: Bundled with Symposium (sparkle, ferris, cargo)

- registry: Installed from the shared agent registry

- custom: User-defined via executable, npx, pipx, cargo, or URL

Distribution Types

Extensions use the same distribution types as agents (see Agent Registry):

- local - executable command on the system

- npx - npm package

- pipx - Python package

- cargo - Rust crate from crates.io

- binary - platform-specific archive download

Configuration

Extensions are passed to symposium-acp-agent via --proxy arguments:

symposium-acp-agent run-with --proxy sparkle --proxy ferris --proxy cargo --agent '...'

Order matters - extensions are applied in the order listed. The first extension is closest to the editor, and the last is closest to the agent.

The special value defaults expands to all known built-in extensions:

--proxy defaults # equivalent to: --proxy sparkle --proxy ferris --proxy cargo

Registry Format

The shared registry includes both agents and extensions:

{

"date": "2026-01-07",

"agents": [...],

"extensions": [

{

"id": "some-extension",

"name": "Some Extension",

"version": "1.0.0",

"description": "Does something useful",

"distribution": {

"npx": { "package": "@example/some-extension" }

}

}

]

}

Architecture

┌─────────────────────────────────────────────────┐

│ Editor Extension (VSCode, Zed, etc.) │

│ - Manages extension configuration │

│ - Builds --proxy args for agent spawn │

└─────────────────┬───────────────────────────────┘

│

┌─────────────────▼───────────────────────────────┐

│ symposium-acp-agent │

│ - Parses --proxy arguments │

│ - Resolves extension distributions │

│ - Builds proxy chain in order │

│ - Conductor orchestrates the chain │

└─────────────────────────────────────────────────┘

Extension Discovery and Recommendations

Symposium can suggest extensions based on a project’s dependencies, so users see the extensions that are relevant to the crates their codebase actually uses.

Extension Source Naming

Extensions are identified using a source field with multiple options:

source.crate = "foo" # Rust crate on crates.io

source.acp = "bar" # Extension ID in ACP registry

source.url = "https://..." # Direct URL to extension.jsonc

Crate-Defined Recommendations

A crate can recommend extensions to its consumers via Cargo.toml metadata:

[package.metadata.symposium]

# Shorthand for crates.io extensions

recommended = ["foo", "bar"]

# Or explicit with full source specification

[[package.metadata.symposium.recommended]]

source.acp = "some-extension"

When Symposium detects this crate in a user’s dependencies, it surfaces these recommendations.

External Recommendations

Symposium maintains a recommendations file that maps crates to suggested extensions. This allows recommendations without requiring upstream crate changes:

[[recommendation]]

source.crate = "tokio-helper"

when-using-crate = "tokio"

[[recommendation]]

source.crate = "sqlx-helper"

when-using-crates = ["sqlx", "sea-orm"]

Users can add their own recommendation files for custom mappings.

Extension Crate Metadata

When a crate is an extension (not just recommending one), it declares runtime metadata:

[package.metadata.symposium]

binary = "my-extension-bin" # Optional: if crate has multiple binaries

args = ["--mcp", "--some-flag"] # Optional: arguments to pass

env = { KEY = "value" } # Optional: environment variables

Standard package fields (name, description, version) come from [package]. This metadata is used both at runtime and by the GitHub Action that publishes to the ACP registry.

Discovery Flow

- Symposium fetches the ACP registry (available extensions and their distributions)

- Symposium loads the recommendations file (external mappings)

- Symposium scans the user’s Cargo.lock for dependencies

- For each dependency, check:

  - Does the recommendations file have an entry with matching when-using-crate(s)?

  - Does the dependency’s Cargo.toml have [package.metadata.symposium.recommended]?

- Surface matching extensions in the UI as suggestions
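The recommendations-file check in the flow above can be sketched as a simple set lookup. This is a minimal illustration under stated assumptions: the `Recommendation` struct, its field names, and `suggest` are hypothetical, and the sketch does not model the Cargo.toml metadata check or the registry fetch.

```rust
use std::collections::BTreeSet;

/// One entry of a recommendations file (field names are illustrative).
struct Recommendation {
    /// Extension to suggest, e.g. the value of `source.crate`.
    extension: String,
    /// Crates that trigger the suggestion (`when-using-crate` / `when-using-crates`).
    when_using: Vec<String>,
}

/// Returns the extensions to suggest for the given dependency names,
/// sorted and de-duplicated.
fn suggest(dependencies: &[&str], recommendations: &[Recommendation]) -> Vec<String> {
    let deps: BTreeSet<&str> = dependencies.iter().copied().collect();
    let mut suggested = BTreeSet::new();
    for rec in recommendations {
        // A recommendation matches if any of its trigger crates is a dependency.
        if rec.when_using.iter().any(|c| deps.contains(c.as_str())) {
            suggested.insert(rec.extension.clone());
        }
    }
    suggested.into_iter().collect()
}
```

With the two example entries from the External Recommendations section, a project depending on `sea-orm` would be offered `sqlx-helper`, and a project depending only on `serde` would get no suggestions.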

Data Sources

| Source | Purpose | Controlled By |

|---|---|---|

| ACP Registry | Extension catalog + distribution info | Community |

| Symposium recommendations | External crate-to-extension mappings | Symposium maintainers |

| User recommendation files | Custom mappings | User |

| Cargo.toml metadata | Crate author recommendations | Crate authors |

Future Work

- Per-extension configuration: Add sub-options for extensions (e.g., which Ferris tools to enable)

- Extension updates: Check for and apply updates to registry-sourced extensions

Components

Symposium’s functionality is delivered through component proxies that are orchestrated by the internal conductor. Some features use a component/adapter pattern while others are standalone components.

Component Types

Standalone Components

Some components provide functionality that doesn’t depend on upstream capabilities. These components work with any editor and add features purely through the proxy layer.

Example: A component that provides git history analysis through MCP tools doesn’t need special editor support - it can work with the filesystem directly.

Component/Adapter Pairs

Other components rely on primitive capabilities from the upstream editor. For these, Symposium uses a two-layer approach:

Adapter Layer

The adapter sits upstream in the proxy chain and provides primitive capabilities that the component needs.

Responsibilities:

- Check for required capabilities during initialization

- Pass requests through if the editor provides the capability

- Provide fallback implementation if the capability is missing

- Abstract away editor differences from the component

Example: The IDE Operations adapter checks if the editor supports ide_operations. If not, it can spawn a language server (like rust-analyzer) to provide that capability.
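The adapter's capability check can be sketched as a routing decision made once at initialization. The names `Route` and `choose_route` are assumptions for illustration, and capabilities are modeled as plain strings; the real adapter would inspect the ACP initialize request.

```rust
/// Where the adapter routes requests for a primitive capability.
#[derive(Debug, PartialEq)]
enum Route {
    /// The editor advertised the capability; pass requests through.
    PassThrough,
    /// The capability is missing; serve requests from a fallback
    /// (for example, a spawned language server).
    Fallback,
}

/// Decides routing from the capabilities the editor reported at initialization.
/// (Hypothetical helper, not part of the Symposium API.)
fn choose_route(editor_capabilities: &[&str], required: &str) -> Route {
    if editor_capabilities.contains(&required) {
        Route::PassThrough
    } else {
        Route::Fallback
    }
}
```

Either way, the component downstream sees the same interface, which is what lets the adapter hide editor differences.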

Component Layer

The component sits downstream from its adapter and enriches primitive capabilities into higher-level MCP tools.

Responsibilities:

- Expose MCP tools to the agent

- Process tool invocations

- Send requests upstream through the adapter

- Return results to the agent

Example: The IDE Operations component exposes an ide_operation MCP tool that accepts Dialect programs and translates them into IDE operation requests sent upstream.

Component Lifecycle

For component/adapter pairs:

- Initialization - Adapter receives initialize request from upstream (editor)

- Capability Check - Adapter examines editor capabilities

- Conditional Spawning - Adapter spawns fallback if capability is missing

- Chain Assembly - Conductor wires adapter → component → downstream

- Request Flow - Agent calls MCP tool → component → adapter → editor

- Response Flow - Results flow back: editor → adapter → component → agent

Proxy Chain Direction

The proxy chain flows from editor to agent:

Editor → [Adapter] → [Component] → Agent

- Upstream = toward the editor

- Downstream = toward the agent

Adapters sit closer to the editor, components sit closer to the agent.

Current Components

Rust Crate Sources

Provides access to published Rust crate source code through an MCP server.

- Type: Standalone component

- Implementation: Injects an MCP server that exposes the rust-crate-sources tool

- Function: Allows agents to fetch and examine source code from crates.io

Sparkle

Provides an AI collaboration framework through prompt injection and MCP tooling.

- Type: Standalone component

- Implementation: Injects Sparkle MCP server with collaboration tools

- Function: Enables partnership dynamics, pattern anchors, and meta-collaboration capabilities

- Documentation: Sparkle docs

Future Components

Additional components can be added following these patterns:

- IDE Operations - Code navigation and search (likely component/adapter pair)

- Walkthroughs - Interactive code explanations

- Git Operations - Repository analysis

- Build Integration - Compilation and testing workflows

Run Mode

The run subcommand simplifies editor integration by reading agent configuration from a file rather than requiring command-line arguments.

Motivation

Without this mode, editor extensions must either:

- Hardcode specific agent commands, requiring extension updates to add new agents

- Expose complex configuration UI for specifying agent commands and proxy options

With run, the extension simply runs:

symposium-acp-agent run

The agent reads its configuration from ~/.symposium/config.jsonc, and if no configuration exists, runs an interactive setup wizard.

Configuration File

Location: ~/.symposium/config.jsonc

The file uses JSONC (JSON with comments) format:

{
  // Downstream agent command (parsed as shell words)
  "agent": "npx -y @zed-industries/claude-code-acp",

  // Proxy extensions to enable
  "proxies": [
    { "name": "sparkle", "enabled": true },
    { "name": "ferris", "enabled": true },
    { "name": "cargo", "enabled": true }
  ]
}

Fields

| Field | Type | Description |

|---|---|---|

| agent | string | Shell command to spawn the downstream agent. Parsed using shell word splitting. |

| proxies | array | List of proxy extensions with name and enabled fields. |

The agent string is parsed as shell words, so commands like npx -y @zed-industries/claude-code-acp work correctly.

Runtime Behavior

┌─────────────────────────────────────────┐
│                   run                   │
└────────────────────┬────────────────────┘
                     │
                     ▼
            ┌─────────────────┐
            │ Config exists?  │
            └────────┬────────┘
                     │
             ┌───────┴───────┐
             │               │
             ▼               ▼
        ┌──────────┐  ┌──────────────┐
        │   Yes    │  │      No      │
        └────┬─────┘  └──────┬───────┘
             │               │
             ▼               ▼
        Load config   Run configuration
        Run agent     agent (setup wizard)

When a configuration file exists, run behaves equivalently to:

symposium-acp-agent run-with \

--proxy sparkle --proxy ferris --proxy cargo \

--agent '{"name":"...","command":"npx",...}'
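The translation from the `proxies` array to `--proxy` arguments can be sketched as follows. `ProxyEntry` is named in the Implementation section, but its exact fields and the helper `proxy_args` are assumptions here, as is the rule that disabled entries are skipped.

```rust
/// Mirrors one entry of the `proxies` array in config.jsonc
/// (field layout assumed from the documented `name` and `enabled` fields).
struct ProxyEntry {
    name: String,
    enabled: bool,
}

/// Builds the `--proxy` arguments that `run` would hand to `run-with`,
/// skipping disabled entries and preserving file order.
/// (Hypothetical helper; the real code path may differ.)
fn proxy_args(proxies: &[ProxyEntry]) -> Vec<String> {
    proxies
        .iter()
        .filter(|p| p.enabled)
        .flat_map(|p| ["--proxy".to_string(), p.name.clone()])
        .collect()
}
```

Because file order is preserved, the order of the `proxies` array determines the position of each extension in the proxy chain.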

Configuration Agent

When no configuration file exists, Symposium runs a built-in configuration agent instead of a downstream AI agent. This agent:

- Presents a numbered list of known agents (Claude Code, Gemini, Codex, Kiro CLI)

- Waits for the user to type a number (1-N)

- Saves the configuration file with all proxies enabled

- Instructs the user to restart their editor

The configuration agent is a simple state machine that expects numeric input. Invalid input causes the prompt to repeat.
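The input handling of that state machine can be sketched as a single parsing step. The function name `parse_choice` is an assumption; the sketch only shows how a typed number maps to a list entry and when the prompt would repeat.

```rust
/// Parses the user's reply as a 1-based menu choice.
/// Returns the zero-based index into the agent list, or `None` if the
/// input is invalid and the prompt should be shown again.
/// (Hypothetical helper, not the actual ConfigurationAgent code.)
fn parse_choice(input: &str, agent_count: usize) -> Option<usize> {
    let n: usize = input.trim().parse().ok()?;
    if (1..=agent_count).contains(&n) {
        Some(n - 1)
    } else {
        None
    }
}
```

With four known agents, the input `2` selects the second entry, while `0`, `5`, or any non-numeric text yields `None`.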

Known Agents

The configuration wizard offers these pre-configured agents:

| Name | Command |

|---|---|

| Claude Code | npx -y @zed-industries/claude-code-acp |

| Gemini CLI | npx -y -- @google/gemini-cli@latest --experimental-acp |

| Codex | npx -y @zed-industries/codex-acp |

| Kiro CLI | kiro-cli-chat acp |

Users can manually edit ~/.symposium/config.jsonc to use other agents or modify proxy settings.

Implementation

The implementation consists of:

- Config types: SymposiumUserConfig and ProxyEntry structs in src/symposium-acp-agent/src/config.rs

- Config loading: load() reads from ~/.symposium/config.jsonc, save() writes it

- Configuration agent: ConfigurationAgent implements the ACP Component trait

- CLI integration: Run variant in the Command enum

Dependencies

| Crate | Purpose |

|---|---|

| serde_jsonc | Parse JSON with comments |

| shell-words | Parse agent command string into arguments |

| dirs | Cross-platform home directory resolution |

Rust Crate Sources Component

The Rust Crate Sources component provides agents with the ability to research published Rust crate source code through a sub-agent architecture.

Architecture Overview

The component uses a sub-agent research pattern: when an agent needs information about a Rust crate, the component spawns a dedicated research session with its own agent to investigate the crate sources and return findings.

Message Flow

sequenceDiagram

participant Client

participant Proxy as Crate Sources Proxy

participant Agent

Note over Client,Proxy: Initial Session Setup

Client->>Proxy: NewSessionRequest

Note right of Proxy: Adds user-facing MCP server<br/>(rust_crate_query tool)

Proxy->>Agent: NewSessionRequest (with user-facing MCP)

Agent-->>Proxy: NewSessionResponse(session_id)

Proxy-->>Client: NewSessionResponse(session_id)

Note over Agent,Proxy: Research Request

Agent->>Proxy: ToolRequest(rust_crate_query, crate, prompt)

Note right of Proxy: Create research session

Proxy->>Agent: NewSessionRequest (with sub-agent MCP)

Note right of Proxy: Sub-agent MCP has:<br/>- get_rust_crate_source<br/>- return_response_to_user

Agent-->>Proxy: NewSessionResponse(research_session_id)

Proxy->>Agent: PromptRequest(research_session_id, prompt)

Note over Agent: Sub-agent researches crate<br/>Uses get_rust_crate_source<br/>Reads files (auto-approved)

Agent->>Proxy: RequestPermissionRequest(Read)

Proxy-->>Agent: RequestPermissionResponse(approved)

Agent->>Proxy: ToolRequest(return_response_to_user, findings)

Proxy-->>Agent: ToolResponse(success)

Note right of Proxy: Response sent via internal channel

Proxy-->>Agent: ToolResponse(rust_crate_query result)

Two MCP Servers

The component provides two distinct MCP servers:

- User-facing MCP Server - Exposed to the main agent session

  - Tool: rust_crate_query - Initiates crate research

- Sub-agent MCP Server - Provided only to research sessions

  - Tool: get_rust_crate_source - Locates crate sources and returns path

  - Tool: return_response_to_user - Returns research findings and ends the session

User-Facing Tool: rust_crate_query

Parameters

{
  crate_name: string,      // Name of the Rust crate
  crate_version?: string,  // Optional semver range (defaults to latest)
  prompt: string           // What to research about the crate
}

Examples

{
  "crate_name": "serde",
  "prompt": "How do I use the derive macro for custom field names?"
}

{
  "crate_name": "tokio",
  "crate_version": "1.0",
  "prompt": "What are the signatures of all methods on tokio::runtime::Runtime?"
}

Behavior

- Creates a new research session via NewSessionRequest

- Attaches the sub-agent MCP server to that session

- Sends the user’s prompt via PromptRequest

- Waits for the sub-agent to call return_response_to_user

- Returns the sub-agent’s findings as the tool result

Sub-Agent Tools

get_rust_crate_source

Locates and extracts the source code for a Rust crate from crates.io.

Parameters:

{
  crate_name: string,
  version?: string  // Semver range
}

Returns:

{
  "crate_name": "serde",
  "version": "1.0.210",
  "checkout_path": "/Users/user/.cargo/registry/src/.../serde-1.0.210",
  "message": "Crate 'serde' version 1.0.210 extracted to ..."
}

The sub-agent can then use Read tool calls (which are auto-approved) to examine the source code.

return_response_to_user

Signals completion of the research and returns findings to the waiting rust_crate_query call.

Parameters:

{
  response: string  // The research findings to return
}

Behavior:

- Sends the response through an internal channel to the waiting tool handler

- The original rust_crate_query call completes with this response

- The research session can then be terminated

Permission Auto-Approval

The component implements a message handler that intercepts RequestPermissionRequest messages from research sessions and automatically approves all permission requests.

Permission Rules

- Research sessions → All permissions automatically approved

- Other sessions → Passed through unchanged

Rationale

Research sessions are sandboxed and disposable - they investigate crate sources and return findings. Auto-approving all permissions eliminates the need for dozens of permission prompts while maintaining safety:

- Research sessions operate on read-only crate sources in the cargo registry cache

- Sessions are short-lived and focused on a single research task

- Any side effects are contained within the research session’s scope

Implementation

The handler checks if a permission request comes from a registered research session and automatically selects the first available option (typically “allow”):

if self.state.is_research_session(&req.session_id) {
    // Select the first option (typically "allow").
    // Note: unwrap() panics if the request carries an empty option list.
    let response = RequestPermissionResponse {
        outcome: RequestPermissionOutcome::Selected {
            option_id: req.options.first().unwrap().id.clone(),
        },
        meta: None,
    };
    request_cx.respond(response)?;
    return Ok(Handled::Yes);
}

return Ok(Handled::No(message)); // Not our session, propagate unchanged

Session Lifecycle

- Agent calls rust_crate_query

  - Handler creates oneshot::channel() for response

  - Registers session in active sessions map

- Handler sends NewSessionRequest

  - Includes sub-agent MCP server configuration

  - Receives session_id in response

- Handler sends PromptRequest

  - Sends user’s research prompt to the session

  - Awaits response on the oneshot channel

- Sub-agent performs research

  - Calls get_rust_crate_source to locate crate

  - Reads source files (auto-approved by permission handler)

  - Analyzes code to answer the prompt

- Sub-agent calls return_response_to_user

  - Sends findings through internal channel

  - Original rust_crate_query call receives response

- Session cleanup

  - Remove session from active sessions map

  - Session termination (if ACP supports explicit session end)

Shared State

The component uses shared state to coordinate between:

- The rust_crate_query tool handler (creates sessions, waits for responses)

- The return_response_to_user tool handler (sends responses)

- The permission request handler (auto-approves permission requests from research sessions)

State Structure

struct ResearchSession {
    session_id: SessionId,
    response_tx: oneshot::Sender<String>,
}

// Shared across all handlers (the alias name is illustrative)
type ActiveSessions = Arc<Mutex<HashMap<SessionId, ResearchSession>>>;

Design Decisions

Why Sub-Agents Instead of Direct Pattern Search?

Previous approach: The component exposed get_rust_crate_source with a pattern parameter that performed regex searches across crate sources.

Problems:

- Agents had to construct exact regex patterns

- Limited to simple pattern matching

- No semantic understanding of code structure

- Single-shot queries couldn’t follow up on findings

Sub-agent approach:

- The agent describes the information it needs in natural language

- Sub-agent can perform multiple reads, follow references, understand context

- Can navigate code structure intelligently

- Returns synthesized answers, not raw pattern matches

Why Auto-Approve All Permissions?

Research sessions need extensive file access to examine crate sources. Requiring user approval for every operation would create dozens of permission prompts, making the feature unusable.

Safety considerations:

- Research sessions are sandboxed and disposable

- Scope is limited to investigating crate sources in cargo registry cache

- Sessions are short-lived with a focused task

- Any side effects are contained within the research session

Why Oneshot Channels for Response Coordination?

Each rust_crate_query call creates exactly one research session and expects exactly one response. A oneshot::channel models this perfectly:

- Type-safe guarantee of single response

- Clear ownership transfer

- Automatic cleanup on drop

- No need to poll or maintain complex state
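The coordination between the two tool handlers can be sketched with the standard library alone. This is a stand-in, not the component's code: it uses a bounded `std::sync::mpsc` channel in place of an async oneshot channel, plain strings in place of `SessionId`, and the type `ResearchSessions` and its method names are assumptions.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{sync_channel, Receiver, SyncSender};
use std::sync::{Arc, Mutex};

/// Maps research session IDs to the sender that completes the waiting query.
#[derive(Clone, Default)]
struct ResearchSessions {
    pending: Arc<Mutex<HashMap<String, SyncSender<String>>>>,
}

impl ResearchSessions {
    /// Called by the `rust_crate_query` handler: registers the session and
    /// returns the receiving end to wait on.
    fn register(&self, session_id: &str) -> Receiver<String> {
        let (tx, rx) = sync_channel(1);
        self.pending.lock().unwrap().insert(session_id.to_string(), tx);
        rx
    }

    /// Called by the `return_response_to_user` handler. Removing the entry
    /// consumes the sender, so a second response for the same session is rejected.
    fn complete(&self, session_id: &str, findings: String) -> bool {
        match self.pending.lock().unwrap().remove(session_id) {
            Some(tx) => tx.send(findings).is_ok(),
            None => false,
        }
    }

    /// Mirrors the check the permission handler performs.
    fn is_research_session(&self, session_id: &str) -> bool {
        self.pending.lock().unwrap().contains_key(session_id)
    }
}
```

Taking the sender out of the map on completion gives the single-response behavior by construction; a real oneshot sender enforces the same thing in its type, since sending consumes it.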

Integration with Symposium

The component is registered with the conductor in symposium-acp-agent/src/symposium.rs:

proxies.push(DynComponent::new(symposium_ferris::FerrisComponent::new(ferris_config)));

The component implements Component::serve() to:

- Register the user-facing MCP server via McpServiceRegistry

- Implement message handling for permission requests

- Forward all other messages to the successor component

Future Enhancements

- Session timeouts - Terminate research sessions that take too long

- Concurrent research - Support multiple research sessions simultaneously

- Caching - Cache common queries to avoid redundant research

- Progressive responses - Stream findings as they’re discovered rather than waiting for completion

- Research history - Allow agents to reference previous research results

VSCode Extension Architecture

The Symposium VSCode extension provides a chat interface for interacting with AI agents. The architecture divides responsibilities across three layers to handle VSCode’s webview constraints while maintaining clean separation of concerns.

Components Overview

mynah-ui: AWS’s open-source chat interface library (github.com/aws/mynah-ui). Provides the chat UI rendering, tab management, and message display. The webview layer uses mynah-ui for all visual presentation.

Agent: Currently a mock implementation (HomerActor) that responds with Homer Simpson quotes. Future implementation will spawn an ACP-compatible agent process (see ACP Integration chapter when available).

Extension activation: VSCode activates the extension when the user first opens the Symposium sidebar or runs a Symposium command. The extension spawns the agent process during activation (or lazily on first use) and keeps it alive for the entire VSCode session.

Three-Layer Model

┌─────────────────────────────────────────────────┐

│ Webview (Browser Context) │

│ - mynah-ui rendering │

│ - User interaction capture │

│ - Tab management │

└─────────────────┬───────────────────────────────┘

│ VSCode postMessage API

┌─────────────────▼───────────────────────────────┐

│ Extension (Node.js Context) │

│ - Message routing │

│ - Agent lifecycle │

│ - Webview lifecycle │

└─────────────────┬───────────────────────────────┘

│ Process spawning / stdio

┌─────────────────▼───────────────────────────────┐

│ Agent (Separate Process) │

│ - Session management │

│ - AI interaction │

│ - Streaming responses │

└─────────────────────────────────────────────────┘

Why Three Layers?

Webview Isolation

VSCode webviews run in isolated browser contexts without Node.js APIs. This security boundary prevents direct file system access, process spawning, or network operations. The webview can only communicate with the extension through VSCode’s postMessage API.

Design consequence: UI code must be pure browser JavaScript. All privileged operations (spawning agents, workspace access, persistence) happen in the extension layer.

Extension as Coordinator

The extension runs in Node.js with full VSCode API access. It bridges between the isolated webview and external agent processes.

Key responsibilities:

- Message routing - Translates between webview UI events and agent protocol messages

- Agent lifecycle - Spawns and manages the agent process

- Webview lifecycle - Handles visibility changes and ensures messages reach the UI

The extension deliberately avoids understanding message semantics. It routes based on IDs (tab ID, message ID) without interpreting content.

Agent Independence

The agent runs as a separate process communicating via stdio. This isolation provides:

- Flexibility - Agent can be any executable (Rust, Python, TypeScript)

- Stability - Agent crashes don’t kill the extension

- Multiple sessions - Single agent process handles all tabs/conversations

The agent owns all session state and conversation logic. The extension only tracks which tab corresponds to which session.

Communication Boundaries

Webview ↔ Extension

Transport: postMessage API (asynchronous, JSON-serializable messages only)

Direction:

- Webview → Extension: User actions (new tab, send prompt, close tab)

- Extension → Webview: Agent responses (response chunks, completion signals)

Why not synchronous? VSCode’s webview API is inherently asynchronous. This forces the UI to be resilient to message delays and webview lifecycle events.

Extension ↔ Agent

Transport: ACP (Agent Client Protocol) over stdio

Direction:

- Extension → Agent: Session commands (new session, process prompt)

- Agent → Extension: Streaming responses, session state updates

Why ACP over stdio? ACP provides a standardized protocol for agent communication. Stdio is simple, universal, and works with any language. No need for network sockets or IPC complexity.

Agent Configuration and Sharing

The extension uses AgentConfiguration to determine when agent processes can be shared across tabs. An AgentConfiguration consists of:

- Agent name (e.g., “ElizACP”, “Claude”)

- Enabled components (e.g., “symposium-acp”)

- Workspace folder (the VSCode workspace the agent operates in)

Sharing strategy: Tabs with identical configurations share the same agent actor (process), but each tab gets its own session within that process.

Workspace folder selection:

- Single workspace: Automatically uses that workspace

- Multiple workspaces: Prompts user to select which workspace folder to use

- Each session is created with the workspace folder as its working directory

Rationale:

- Resource efficiency - Shared actor means one process for multiple tabs with the same config

- Workspace isolation - Different workspace folders get different actors to maintain proper working directory context

- Session isolation - Each tab gets its own session ID for conversation independence

Trade-off: Agent must implement multiplexing. Messages include session/tab IDs for routing. Extension maps UI tab IDs to agent session IDs.
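The sharing strategy amounts to keying agent actors by configuration. The sketch below illustrates that idea in Rust to keep the examples in one language; the extension itself runs in Node.js. The field names and the `ActorPool` type are assumptions, and actors are represented by plain integer IDs.

```rust
use std::collections::HashMap;

/// The key that decides whether two tabs may share an agent process.
#[derive(Clone, PartialEq, Eq, Hash)]
struct AgentConfiguration {
    agent_name: String,
    components: Vec<String>,
    workspace_folder: String,
}

/// Hands out one actor (process) per distinct configuration.
#[derive(Default)]
struct ActorPool {
    actors: HashMap<AgentConfiguration, usize>,
}

impl ActorPool {
    /// Returns the actor ID for this configuration, creating one if needed.
    /// Each tab would still open its own session on the returned actor.
    fn actor_for(&mut self, config: &AgentConfiguration) -> usize {
        let next_id = self.actors.len();
        *self.actors.entry(config.clone()).or_insert(next_id)
    }
}
```

Two tabs opened on the same workspace with the same agent and components resolve to the same actor, while a tab on a different workspace folder gets a new one.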

Design Principles

Opaque state: Each layer owns its state format. Extension stores but doesn’t parse webview UI state or agent session state.

Graceful degradation: Webview can be hidden/shown at any time. Extension buffers messages when webview is inactive.

UUID-based identity: Tab IDs and message IDs use UUIDs to avoid collisions. Both are generated at the source (the webview creates a tab ID when a tab opens and a message ID when a prompt is sent), which eliminates coordination overhead.

Minimal coupling: Layers communicate through well-defined message protocols. Webview doesn’t know about agents. Agent doesn’t know about webviews. Extension coordinates without understanding semantics.

End-to-End Flow

Here’s how a complete user interaction flows through the system:

sequenceDiagram

participant User

participant VSCode

participant Extension

participant Webview

participant Agent

User->>VSCode: Opens Symposium sidebar

VSCode->>Extension: activate()

Extension->>Extension: Generate session ID

Extension->>Agent: Spawn process

Extension->>Webview: Create webview (inject session ID)

Webview->>Webview: Load, check session ID vs saved state

Webview->>Webview: Restore or clear tabs, initialize mynah-ui

Webview->>Extension: webview-ready (last-seen-index)

User->>Webview: Creates new tab

Webview->>Webview: Generate tab UUID

Webview->>Extension: new-tab (tabId)

Extension->>Agent: new-session

Agent->>Agent: Initialize session

Agent->>Extension: session-created (sessionId)

Extension->>Extension: Store tabId ↔ sessionId mapping

User->>Webview: Sends prompt

Webview->>Webview: Generate message UUID

Webview->>Extension: prompt (tabId, messageId, text)

Extension->>Extension: Lookup sessionId for tabId

Extension->>Agent: process-prompt (sessionId, text)

loop Streaming response

Agent->>Extension: response-chunk (sessionId, chunk)

Extension->>Extension: Lookup tabId for sessionId

Extension->>Webview: response-chunk (tabId, messageId, chunk)

Webview->>Webview: Render chunk in mynah-ui

end

Agent->>Extension: response-complete (sessionId)

Extension->>Webview: response-complete (tabId, messageId)

Webview->>Webview: End message stream

Webview->>Webview: setState() - persist session ID and tabs

The extension maintains tab↔session mappings and handles webview visibility, while the agent maintains session state and generates responses.

See also: Common Issues for recurring bug patterns.

Message Protocol

The extension coordinates message flow between the webview UI and agent process. Messages are identified by UUIDs and routed based on tab/session mappings.

Message Identity

The system uses two separate identification mechanisms:

Message IDs (UUIDs): Identify specific prompt/response conversations. When a user sends a prompt, the webview generates a UUID message ID. All response chunks for that prompt include the same message ID, allowing the UI to associate chunks with the correct prompt and render them in the right place. Message IDs enable multiple concurrent prompts (user sends prompt in tab A while tab B is still streaming a response).

Message indices (numbers): Monotonically increasing integers assigned by the extension per tab, used exclusively for deduplication. When the webview is hidden and shown, the extension may replay messages to ensure nothing was missed. The webview tracks the last index it saw per tab (via lastSeenIndex map) and ignores messages with index <= lastSeenIndex[tabId]. This prevents duplicate response chunks from appearing in the UI.

Why both? Message IDs provide semantic identity (“which conversation is this?”). Message indices provide delivery tracking (“have I seen this before?”). The extension assigns indices sequentially as messages flow through; the webview uses UUIDs for UI routing and indices for deduplication.
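The index-based deduplication can be sketched as a per-tab high-water mark. The sketch is written in Rust for consistency with the other examples, although the webview runs browser JavaScript; the type `LastSeen` and the method `accept` are assumptions.

```rust
use std::collections::HashMap;

/// Webview-side record of the highest message index seen per tab.
#[derive(Default)]
struct LastSeen {
    by_tab: HashMap<String, u64>,
}

impl LastSeen {
    /// Returns true if the message is new and should be rendered,
    /// false if it is a replayed duplicate (index <= last seen for the tab).
    fn accept(&mut self, tab_id: &str, index: u64) -> bool {
        let is_duplicate = self
            .by_tab
            .get(tab_id)
            .map_or(false, |&last| index <= last);
        if is_duplicate {
            return false;
        }
        self.by_tab.insert(tab_id.to_string(), index);
        true
    }
}
```

Because indices increase monotonically per tab, a single stored number per tab is enough to discard any replayed message.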

Message Flow Patterns

Opening a New Tab

sequenceDiagram

participant User

participant Webview

participant Extension

participant Agent

User->>Webview: Opens new tab

Webview->>Webview: Generate tab ID (UUID)

Webview->>Extension: new-tab (tabId)

Extension->>Agent: new-session

Agent->>Agent: Initialize session

Agent->>Extension: session-created (sessionId)

Extension->>Extension: Store tabId → sessionId mapping

Why UUID generation in webview? The webview owns tab lifecycle. Generating IDs at the source avoids round-trip coordination with the extension.

Why separate session IDs? The agent owns session identity. Tab IDs are UI concepts; session IDs are agent concepts. The extension maps between them without understanding either.

Sending a Prompt

sequenceDiagram

participant User

participant Webview

participant Extension

participant Agent

User->>Webview: Types message

Webview->>Extension: prompt (tabId, messageId, text)

Extension->>Extension: Lookup sessionId for tabId

Extension->>Agent: process-prompt (sessionId, text)

loop Streaming response

Agent->>Extension: response-chunk (sessionId, chunk)

Extension->>Extension: Lookup tabId for sessionId

alt Webview visible

Extension->>Webview: response-chunk (tabId, messageId, chunk)

Webview->>Webview: Append to message stream

else Webview hidden

Extension->>Extension: Buffer message

end

end

Agent->>Extension: response-complete (sessionId)

Extension->>Webview: response-complete (tabId, messageId)

Webview->>Webview: End message stream

Why streaming? AI responses can take seconds to complete. Streaming provides immediate feedback and allows users to start reading while generation continues.

Why message IDs? Multiple prompts can be in flight simultaneously (user sends prompt in tab A while tab B is still receiving a response). Message IDs ensure response chunks are associated with the correct prompt.

Why buffer when hidden? VSCode can hide webviews at any time (user switches away, collapses sidebar). Buffering ensures the UI sees all messages when it becomes visible again.

Closing a Tab

sequenceDiagram

participant User

participant Webview

participant Extension

participant Agent

User->>Webview: Closes tab

Webview->>Extension: close-tab (tabId)

Extension->>Extension: Lookup sessionId for tabId

Extension->>Agent: close-session (sessionId)

Agent->>Agent: Cleanup session state

Extension->>Extension: Remove tabId → sessionId mapping

Why explicit close messages? Allows agent to clean up resources (free memory, close file handles) rather than leaking session state indefinitely.

Message Identification Strategy

Tab IDs

- Generated by: Webview (when user creates new tab)

- Format: UUID v4

- Scope: UI-only concept

- Lifetime: From tab creation to tab close

Session IDs

- Generated by: Agent (in response to new-session)

- Format: Agent-defined (typically UUID)

- Scope: Agent-only concept

- Lifetime: From session creation to session close

Message IDs

- Generated by: Webview (when user sends prompt)

- Format: UUID v4

- Scope: Used by both webview and extension for response routing

- Lifetime: From prompt send to response complete

Why three separate ID spaces? Each layer owns its identity domain. This avoids coupling and eliminates coordination overhead.

Bidirectional Mapping

The extension maintains two maps:

tabId → sessionId (for extension → agent messages)

sessionId → tabId (for agent → extension messages)

Synchronization: Maps are updated atomically when session creation completes. Both directions always stay consistent.

Cleanup: Both mappings are removed when either the tab closes or the session ends.
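A minimal sketch of the two maps, assuming string IDs and hypothetical names (`TabSessionMap`, `link`, `unlink_tab`). Every mutation touches both maps in the same call, which is what keeps the two directions consistent. Rust is used only to keep the examples in one language.

```rust
use std::collections::HashMap;

/// Bidirectional tab/session lookup kept by the extension.
#[derive(Default)]
struct TabSessionMap {
    tab_to_session: HashMap<String, String>,
    session_to_tab: HashMap<String, String>,
}

impl TabSessionMap {
    /// Records both directions once session creation completes.
    fn link(&mut self, tab_id: &str, session_id: &str) {
        self.tab_to_session.insert(tab_id.to_string(), session_id.to_string());
        self.session_to_tab.insert(session_id.to_string(), tab_id.to_string());
    }

    /// Used when routing extension -> agent messages.
    fn session_for(&self, tab_id: &str) -> Option<&str> {
        self.tab_to_session.get(tab_id).map(String::as_str)
    }

    /// Used when routing agent -> extension messages.
    fn tab_for(&self, session_id: &str) -> Option<&str> {
        self.session_to_tab.get(session_id).map(String::as_str)
    }

    /// Removes both directions when the tab closes.
    fn unlink_tab(&mut self, tab_id: &str) {
        if let Some(session_id) = self.tab_to_session.remove(tab_id) {
            self.session_to_tab.remove(&session_id);
        }
    }
}
```

A matching removal keyed by session ID would cover the case where the session ends first.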

Message Ordering Guarantees

Within a session: Agent processes prompts sequentially. A second prompt won’t start processing until the first response completes.

Across sessions: No ordering guarantees. Tabs are independent. Multiple sessions can stream responses simultaneously.

Webview messages: Delivered in order sent, but delivery timing depends on webview visibility. Buffered messages are replayed in order when webview becomes visible.
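The buffering rule for webview messages can be sketched as a small outbox. The type `Outbox` and its methods are assumptions for illustration, messages are modeled as strings, and the sketch is in Rust only to match the other examples.

```rust
use std::collections::VecDeque;

/// Extension-side outbox for one webview.
#[derive(Default)]
struct Outbox {
    visible: bool,
    buffered: VecDeque<String>,
}

impl Outbox {
    /// Returns the messages to deliver right now (empty while hidden,
    /// in which case the message is queued instead).
    fn send(&mut self, message: String) -> Vec<String> {
        if self.visible {
            vec![message]
        } else {
            self.buffered.push_back(message);
            Vec::new()
        }
    }

    /// Marks the webview visible and returns buffered messages in send order.
    fn show(&mut self) -> Vec<String> {
        self.visible = true;
        self.buffered.drain(..).collect()
    }

    /// Marks the webview hidden; later messages are buffered.
    fn hide(&mut self) {
        self.visible = false;
    }
}
```

A first-in, first-out queue is what preserves the ordering guarantee across a hide and show cycle.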

Error Handling

Agent crashes: Extension detects process exit, notifies all active tabs. Tabs display error state. User can trigger agent restart.

Webview disposal: Extension maintains agent sessions. If webview is recreated (VSCode restart), extension can restore tab → session mappings and continue existing sessions.

Message delivery failure: If webview is disposed while messages are buffered, messages are discarded. Agent sessions may continue running. Next webview instantiation can restore session state.

Design Rationale

Why not request/response? Streaming responses require continuous message flow, not single request/reply pairs. The protocol is inherently asynchronous.

Why not share IDs across layers? Each layer has different lifecycle concerns. Decoupling identity spaces allows independent evolution. Extension acts as impedance matcher between UI tab identity and agent session identity.

Why buffer in extension instead of agent? Agent shouldn’t need to know about webview lifecycle. Extension handles VSCode-specific concerns (visibility, disposal) to keep agent implementation portable.

Tool Use Authorization

When agents request permission to execute tools (file operations, terminal commands, etc.), the extension provides a user approval mechanism. This chapter describes how authorization requests flow through the system and how per-agent policies are enforced.

Architecture

The authorization flow bridges three layers:

Agent (ACP requestPermission) → Extension (Promise-based routing) → Webview (MynahUI approval card)

The extension acts as the coordination point:

- Receives synchronous requestPermission callbacks from the ACP agent

- Checks per-agent bypass settings

- Routes approval requests to the webview when user input is needed

- Blocks the agent using promises until the user responds

Authorization Flow

With Bypass Disabled

sequenceDiagram

participant Agent

participant Extension

participant Settings

participant Webview

participant User

Agent->>Extension: requestPermission(toolCall, options)

Extension->>Settings: Check agents[agentName].bypassPermissions

Settings-->>Extension: false

Extension->>Extension: Generate approval ID, create pending promise

Extension->>Webview: approval-request message

Webview->>User: Display approval card (MynahUI)

User->>Webview: Click approve/deny/bypass

Webview->>Extension: approval-response message

alt User selected "Bypass Permissions"

Extension->>Settings: Set agents[agentName].bypassPermissions = true

end

Extension->>Extension: Resolve promise with user's choice

Extension-->>Agent: return RequestPermissionResponse

With Bypass Enabled

sequenceDiagram

participant Agent

participant Extension

participant Settings

Agent->>Extension: requestPermission(toolCall, options)

Extension->>Settings: Check agents[agentName].bypassPermissions

Settings-->>Extension: true

Extension-->>Agent: return allow_once (auto-approved)

Promise-Based Blocking

The ACP SDK’s requestPermission callback is a request/response call: it must return a Promise<RequestPermissionResponse>, and the agent waits until that promise settles. The extension creates a promise that resolves when the user responds:

async requestPermission(params) {

// Check bypass setting first

if (agentConfig.bypassPermissions) {

return { outcome: { outcome: "selected", optionId: allowOptionId } };

}

// Create promise that will resolve when user responds

const promise = new Promise((resolve, reject) => {

pendingApprovals.set(approvalId, { resolve, reject, agentName });

});

// Send request to webview

sendToWebview({ type: "approval-request", approvalId, ... });

// Return promise (blocks agent until resolved)

return promise;

}

When the webview sends approval-response, the extension resolves the promise:

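case "approval-response": {

const pending = pendingApprovals.get(message.approvalId);

pendingApprovals.delete(message.approvalId); // Drop the entry so the map does not grow

pending?.resolve(message.response); // Unblocks agent; unknown IDs are ignored

break;

}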

This allows the agent to block on permission requests without blocking the extension’s event loop.
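
Putting the two halves together, the pending-approval bookkeeping could be packaged as below. This is a self-contained sketch, not the extension's actual code: ApprovalBroker, PendingApproval, and the trimmed-down PermissionResponse type are hypothetical, and a counter stands in for UUID generation.

```typescript
// Hypothetical, trimmed-down stand-in for the ACP response shape.
interface PermissionResponse {
  outcome: { outcome: "selected"; optionId: string };
}

interface ApprovalRequest {
  type: "approval-request";
  approvalId: string;
  agentName: string;
}

interface PendingApproval {
  resolve: (response: PermissionResponse) => void;
  reject: (reason: Error) => void;
  agentName: string;
}

class ApprovalBroker {
  private readonly pending = new Map<string, PendingApproval>();
  private readonly sendToWebview: (message: ApprovalRequest) => void;
  private counter = 0;

  constructor(sendToWebview: (message: ApprovalRequest) => void) {
    this.sendToWebview = sendToWebview;
  }

  // Agent side: returns a promise that stays pending until respond() is called.
  request(agentName: string): Promise<PermissionResponse> {
    this.counter += 1;
    const approvalId = `approval-${this.counter}`; // stand-in for a UUID
    const promise = new Promise<PermissionResponse>((resolve, reject) => {
      // The executor runs synchronously, so the entry exists before we send.
      this.pending.set(approvalId, { resolve, reject, agentName });
    });
    this.sendToWebview({ type: "approval-request", approvalId, agentName });
    return promise;
  }

  // Webview side: settle the matching promise. Returns false for unknown IDs.
  respond(approvalId: string, response: PermissionResponse): boolean {
    const entry = this.pending.get(approvalId);
    if (entry === undefined) return false;
    this.pending.delete(approvalId);
    entry.resolve(response);
    return true;
  }

  get pendingCount(): number {
    return this.pending.size;
  }
}
```

Removing the entry before resolving means a duplicate approval-response for the same ID is simply ignored.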

Per-Agent Settings

Authorization policies are scoped per-agent in symposium.agents configuration:

{

"symposium.agents": {

"Claude Code": {

"command": "npx",

"args": ["@zed-industries/claude-code-acp"],

"bypassPermissions": true

},

"ElizACP": {

"command": "elizacp",

"bypassPermissions": false

}

}

}

Why per-agent? Different agents have different trust levels. A user might trust Claude Code with unrestricted file access but want to review every tool call from an experimental agent.

Scope: Settings are stored globally (VSCode user settings), so bypass policies persist across workspaces and sessions.
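
As an illustration of how the per-agent flag might be read and updated, the sketch below works on a plain object shaped like the symposium.agents setting above. The function names are hypothetical, and treating a missing agent or missing flag as "do not bypass" is an assumption rather than documented behaviour.

```typescript
// Shape of one entry under "symposium.agents", as shown in the example above.
interface AgentConfig {
  command: string;
  args?: string[];
  bypassPermissions?: boolean;
}

type AgentsSetting = { [agentName: string]: AgentConfig | undefined };

// Assumption: unknown agents and unset flags default to prompting the user.
function shouldBypass(agents: AgentsSetting, agentName: string): boolean {
  return agents[agentName]?.bypassPermissions === true;
}

// Returns a copy of the setting with bypass enabled for one agent.
// Agents that are not configured are left untouched.
function withBypassEnabled(agents: AgentsSetting, agentName: string): AgentsSetting {
  const current = agents[agentName];
  if (current === undefined) return agents;
  return { ...agents, [agentName]: { ...current, bypassPermissions: true } };
}
```

Returning a new object instead of mutating in place keeps the helper independent of how the setting is eventually written back.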

User Approval Options

When bypass is disabled, the webview displays three options:

- Approve - Allow this single tool call, continue prompting for future tools

- Deny - Reject this single tool call, continue prompting for future tools

- Bypass Permissions - Approve this call AND set bypassPermissions = true for this agent permanently

The “Bypass Permissions” option provides a quick path to trusted status without requiring manual settings edits.

Webview UI Implementation

The webview uses MynahUI primitives to display approval requests:

- Chat item - Approval request appears as a chat message in the conversation

- Buttons - Three buttons (Approve, Deny, Bypass) using MynahUI’s button status colors

- Tool details - Tool name, parameters (formatted as JSON), and any available metadata

- Card dismissal - Cards auto-dismiss after the user clicks a button (keepCardAfterClick: false)

The specific MynahUI API usage is documented in the MynahUI GUI reference.

Approval Request Message

Extension → Webview:

{

type: "approval-request",

tabId: string,

approvalId: string, // UUID for matching response

agentName: string, // Which agent is requesting permission

toolCall: {

toolCallId: string, // ACP tool call identifier

title?: string, // Human-readable tool name (may be null)

kind?: ToolKind, // "read", "edit", "execute", etc.

rawInput?: object // Tool parameters

},

options: PermissionOption[] // Available approval options from ACP

}

Approval Response Message

Webview → Extension:

{

type: "approval-response",

approvalId: string, // Matches approval-request

response: {

outcome: {

outcome: "selected",

optionId: string // Which option was chosen

}

},

bypassAll: boolean // True if "Bypass Permissions" clicked

}
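
Messages arriving from the webview are untyped at runtime, so the extension may want to check the shape before acting on it. The guard below is a sketch based only on the approval-response fields listed above; the function names are hypothetical.

```typescript
// Mirrors the approval-response message shape described above.
interface ApprovalResponseMessage {
  type: "approval-response";
  approvalId: string;
  response: { outcome: { outcome: "selected"; optionId: string } };
  bypassAll: boolean;
}

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null;
}

// Narrows an arbitrary incoming message to ApprovalResponseMessage.
function isApprovalResponse(message: unknown): message is ApprovalResponseMessage {
  if (!isRecord(message)) return false;
  if (message["type"] !== "approval-response") return false;
  if (typeof message["approvalId"] !== "string") return false;
  if (typeof message["bypassAll"] !== "boolean") return false;
  const response = message["response"];
  if (!isRecord(response)) return false;
  const outcome = response["outcome"];
  return (
    isRecord(outcome) &&
    outcome["outcome"] === "selected" &&
    typeof outcome["optionId"] === "string"
  );
}
```

A handler can call isApprovalResponse(message) first and only then resolve the pending approval and, when bypassAll is true, update the agent's setting.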

Design Decisions

Why block the agent? Tool execution should wait for user consent. Continuing execution while waiting for approval would allow the agent to make progress on non-tool operations, potentially creating race conditions where the user approves a tool call that’s no longer relevant.

Why promise-based? JavaScript promises provide natural blocking semantics. The extension can return immediately (non-blocking event loop) while the agent perceives the call as synchronous (blocking until approval).

Why store in settings? Bypass permissions should persist across sessions. VSCode settings provide durable storage with UI for manual editing if needed.

Why auto-dismiss cards? Once the user responds, the approval card is no longer actionable. Dismissing it keeps the conversation history clean and focused on the actual work.

Future Enhancements

Potential extensions to the authorization system:

- Per-tool policies - Trust specific tools (e.g., “always allow Read”) while prompting for others

- Resource-based rules - Auto-approve file reads within certain directories

- Temporary sessions - “Bypass for this session” option that doesn’t persist

- Approval history - Log of past approvals for security auditing

- Batch approvals - Approve multiple pending tool calls at once

Webview State Persistence

The webview must preserve chat history and UI state across hide/show cycles, but clear state when VSCode restarts. This requires distinguishing between temporary hiding and permanent disposal.

The Problem

VSCode webviews face two distinct lifecycle events that look identical from the webview’s perspective:

- User collapses sidebar - Webview is hidden but should restore exactly when reopened

- VSCode restarts - Webview is disposed and recreated, should start fresh

Both events destroy and recreate the webview DOM. The webview cannot distinguish between them without additional context.

User expectation: Chat history persists within a VSCode session but doesn’t carry over to the next session. Draft text should survive sidebar collapse but not VSCode restart.

Session ID Solution

The extension generates a session ID (UUID) once per VSCode session at activation. This ID is embedded in the webview HTML as a global JavaScript variable (window.SYMPOSIUM_SESSION_ID) in a script tag. The webview reads this variable synchronously on load and compares it against the session ID stored in saved state.

sequenceDiagram

participant VSCode

participant Extension

participant Webview

Note over VSCode: Extension activation

Extension->>Extension: Generate session ID

Note over VSCode: User opens sidebar

Extension->>Webview: Create webview with session ID

Webview->>Webview: Load saved state

alt Session IDs match

Webview->>Webview: Restore chat history

else Session IDs don't match (or no saved ID)

Webview->>Webview: Clear state, start fresh

end
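
The comparison in the diagram reduces to a small decision function on the webview side. This is a sketch under assumptions: the SavedState shape and the function name are hypothetical. In a real webview the saved value would presumably come from the VS Code webview API's getState() and the current ID from window.SYMPOSIUM_SESSION_ID.

```typescript
// Hypothetical saved-state shape: only sessionId is implied by the text above.
interface SavedState {
  sessionId: string;
  draft: string;
  messages: string[];
}

// Restore saved state only when it was written during the current VSCode session.
function restoreOrReset(saved: SavedState | undefined, currentSessionId: string): SavedState {
  if (saved !== undefined && saved.sessionId === currentSessionId) {
    return saved; // Same session: the sidebar was only hidden, so restore.
  }
  // Different session, or nothing saved: start fresh under the current ID.
  return { sessionId: currentSessionId, draft: "", messages: [] };
}
```

Stamping the fresh state with the current session ID means the next hide/show cycle within the same VSCode session takes the restore branch.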

Why this works: